Taking AI Offline: Lauren Lichtman & Navya Akkinepally Build Tools for Education

Meet the leaders who are putting AI to work for good. Humans of AI for Humanity is a joint content series from the Patrick J. McGovern Foundation and Fast Forward. Each month, we highlight experts, builders, and thought leaders using AI to create a human-centered future — and the stories behind their work.

What if the most powerful AI tools were within reach of the people who could benefit from them most?

For the 2.6B people still offline, AI tools to support learning often aren’t an option. Navya Akkinepally and Lauren Lichtman are working to change that. As co-executive directors of Learning Equality, they’re building AI-driven tools that work anywhere — even in the most disconnected classrooms.

Navya used to teach in a low-income school in India, where she saw firsthand how limited resources held her students back. Lauren worked in global development, where she witnessed how inclusive learning opportunities could change lives. Now, they’re making AI work for the educators and students who could benefit from it most — whether that’s automating curriculum organization, co-designing lesson plans, or supporting teacher training.

The two agree that AI shouldn’t widen the learning gap; it should close it. In this conversation, they share how their journeys led them to AI, why offline-first solutions are critical, and what it takes to build AI tools that serve all learners.

How did your journey inspire you to explore AI for humanity?

Navya: My journey in education has always been about expanding human agency, ensuring that learners, regardless of their background, have the tools and opportunities to shape their futures. As a teacher in a low-income school in India, I saw firsthand how bright, capable students, especially girls, were held back by lack of resources, social expectations, or digital exclusion. Their potential often remained untapped. However, I also saw firsthand the positive impact of great teaching and community engagement. Applying those lessons at scale is what drives me to do this work every day. Technology isn’t a silver bullet, but it can be a great enabler of impact at scale.

Lauren: For me, it started at home. I grew up in New York and saw disparities everywhere. I saw how policies impacted learners differently, from district funding to state test questions. Now, as a parent of a kindergartener, I’m seeing different approaches to using tech in the classroom. I want to ensure it’s done meaningfully, with equitable access as a key factor in decision-making. It’s experiences like these that inspire me to explore AI for humanity. Tech, when used thoughtfully, has such great potential to be an equalizer, and AI is no different. It’s an incredible tool we’re learning to harness for good.

At Learning Equality, we’re addressing these challenges by developing offline-first AI tools that empower educators and students to take control of their learning. AI shouldn’t dictate rigid pathways — it should be a creative accelerator, helping educators make informed decisions and allowing students to explore knowledge in ways that resonate with them. We both feel that when AI is designed with and for disconnected communities, it can open up a world of possibilities.

How can AI be leveraged to address disparities in access to education?

Lauren: AI has the potential to bridge learning gaps, but only if it is intentionally designed to expand access rather than reinforce exclusion. For the 2.6B people still offline, traditional AI-powered educational tools that rely on connectivity risk leaving them further behind.

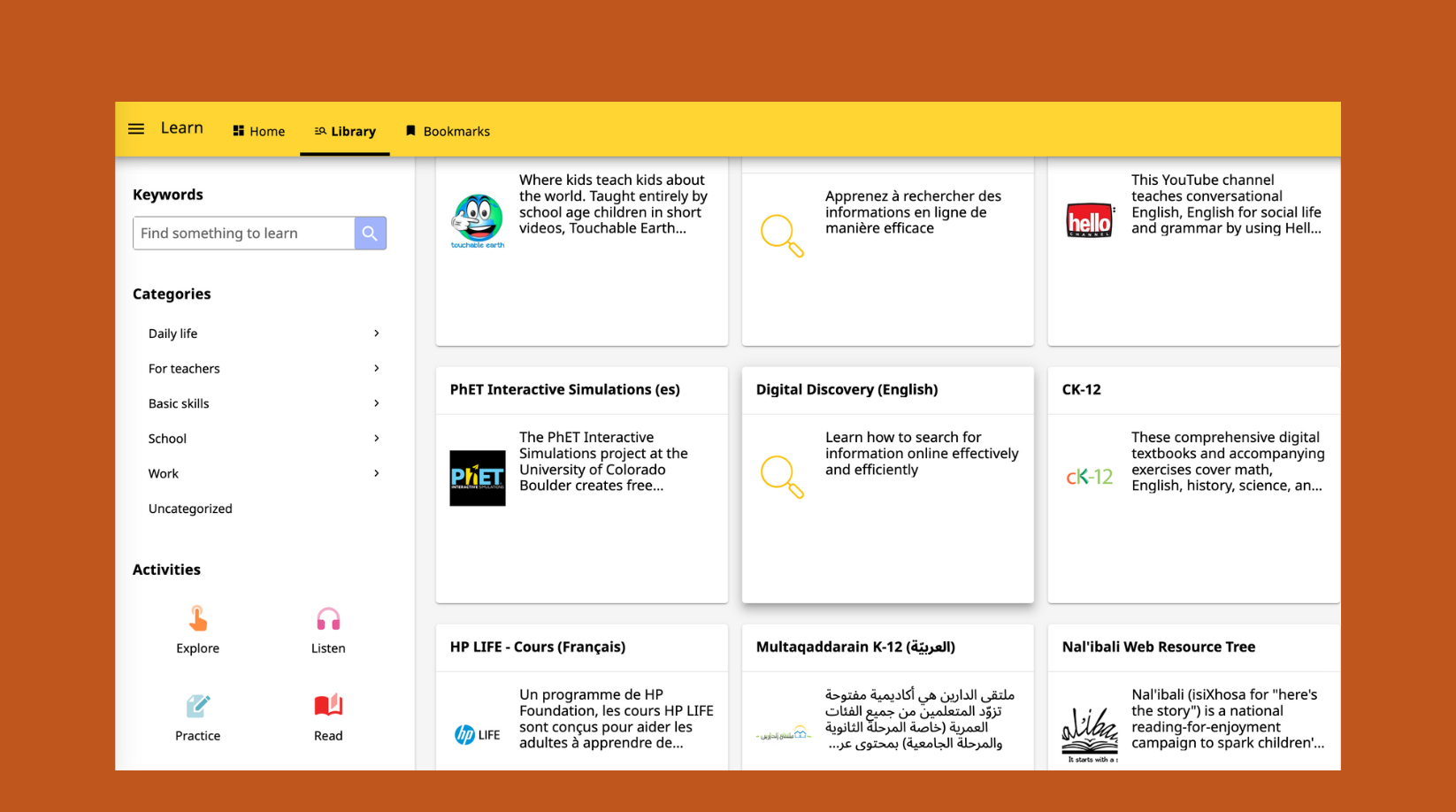

At Learning Equality, we use AI to automate the heavy lifting for educators, curriculum designers, and program specialists in low-resource settings. Our AI tools focus on “back office” tasks, allowing teachers and learners to benefit even if they don’t interact with the AI directly. We’ve started by automating the way learning resources are made available for teachers (think classroom activities, math problem sets, interactive simulations) based on the curricular standards they’re asked to follow. This is particularly important in low-resource settings where learning materials may be limited. By using free, open educational resources, we can help bridge this gap. As generative AI has improved, so has our ability to more fully automate parts of this process. We’re also exploring ways to co-design lesson plans with teachers using AI in low-resource classrooms.

Navya: We’ve always focused on tackling education disparities. Our offline-first platform, Kolibri, already brings high-quality, curriculum-aligned content to students and teachers in remote schools, rural community centers, and refugee camps. This year, we are leveling up and putting AI directly into the hands of teachers and learners so they can use these tools themselves.

We’re excited to develop AI-powered tools that support teacher learning and coaching, helping shift teaching practices to be more student-centered. Our research shows that training teachers in student-centered practices improves student outcomes. However, traditional training approaches are hard to scale efficiently. A mix of in-person training and ongoing AI-assisted support can scale these approaches affordably.

To leverage AI equitably, it must be designed in partnership with local communities, reflecting their linguistic, cultural, and pedagogical needs. Otherwise, AI risks becoming another tool that dictates outcomes rather than empowering learners and teachers.

What support is needed for educators and organizations to adopt AI technologies?

Navya: At one of our educator training workshops a few years ago, we asked participants what barriers they face daily. One trainer shared that their school had five different educational intervention programs running simultaneously, so adding another edtech intervention meant a significant amount of additional labor. This was an important need to recognize, and it reinforced that we must meet teachers where they are and support them in gaining digital and AI literacy skills. At the same time, AI adoption isn’t just about introducing new tools; it’s about ensuring educators have the training, agency, and confidence to integrate AI in ways that genuinely support learning.

For AI to be truly equitable, educators must be involved in its design and implementation. At Learning Equality, we work directly with teachers to develop AI-driven tools that support, rather than disrupt, their classrooms. For example, AI can suggest teaching strategies right at the moment they’re needed, align thousands of resources with local curricula in days instead of months, and provide insights on student progress, freeing up educators to focus on mentorship and engagement.

Learning Equality's Kolibri platform

What core values drive your unique vision for impact in an AI-driven future?

Lauren: Our organization is guided by five core values that shape our decision-making and hold us accountable. They ensure our actions align with the communities we serve, and they drive our vision for an AI-driven future. A truly human-centered AI future isn’t one where learners passively consume knowledge, but one where they have the tools, confidence, and opportunities to shape AI itself.

As we strive for equity, we want to ensure that AI expands access rather than limits it. We learn constantly, recognizing that meaningful change requires iteration, adaptation, and listening to the communities we serve. We also continue to learn how AI can improve our internal processes to enhance our work as an AI-powered nonprofit. We believe in relationships, which is why we co-design AI tools directly with the communities we serve and prioritize their voices. Through openness, we believe in the importance of sharing our knowledge freely and aim to share data and tools where possible. Most importantly, we lead with empathy — valuing lived experiences, engaging diverse perspectives, and designing AI that serves real human needs. We don’t apply AI just because it sounds right or it’s new and shiny, but because it can be a useful tool.

Which visionary leaders, philosophies, or movements give you hope for a more human-centered AI future?

Both: Paulo Freire. Jinx!

Navya: As we explore student-centered learning and the shift toward this approach in low-resource contexts through edtech, we often come back to Freire’s critical pedagogy work. His philosophy sees education as a tool for questioning power structures and expanding agency. Paulo Freire deeply inspires me — not only in how I think about education, but in how I imagine AI’s role in supporting human dignity and liberation. His vision of education was collaborative, rooted in the lived experiences of learners, and designed to empower. That same ethos must guide how we design and deploy AI tools, especially in contexts with limited connectivity, resources, and choice.

Freire reminded us that education is never neutral; it either reinforces the status quo or becomes a practice of freedom. Similarly, AI is not inherently equitable — it reflects the values of those who shape it. So when we build AI to support student-centered learning in low-resource settings, I ask: does this tool help learners and educators “read and write” their world, as Freire would say? Does it help them question, imagine, and create? Freire’s work pushes me to ensure that AI in education isn’t about standardizing instruction, but about cultivating possibility — even, and especially, offline.

Lauren: Like Navya, as a student of peace education, I continually reflect on Freire’s work to ensure that we use tech to uplift and empower. His ideas also inform our commitment to open knowledge and open source, which we see as essential to promoting global access and human-centered design. We believe deeply in the importance of open source because it allows others to build on top of our tools. It also enables us to reach more disconnected communities that would otherwise be limited by restrictive licenses or internet-dependent technologies.

We want to shift power in AI and tech development so that AI consumers can also be the ones who develop it. This belief is rooted in the idea that knowledge should be co-created and that tools should be built with, not for, communities. It directly aligns with Paulo Freire’s vision of education as a liberatory practice — one where learners are not passive recipients, but active authors of their realities.

That’s why open-source development and transparency are essential to our work. When educators in low-resource settings can access, adapt, and even remix AI tools to fit their context, it shifts the power dynamic. It turns AI from a top-down intervention into a bottom-up, participatory process. That’s the kind of human-centered AI future I believe in — one where the tools don’t just serve people, but are shaped by them.

What is your 7-word autobiography?

Navya: Facilitator. Mother. Educator. Hopeful for meaningful change.

Lauren: Connector, strategist, advocate — building bridges for equity.

Stay tuned for next month’s Humans of AI for Humanity blog, featuring Jacaranda Health Co-Executive Director Sathy Rajasekharan. For more on AI for good, subscribe to Fast Forward’s AI for Humanity newsletter and keep an eye out for updates from the Patrick J. McGovern Foundation.